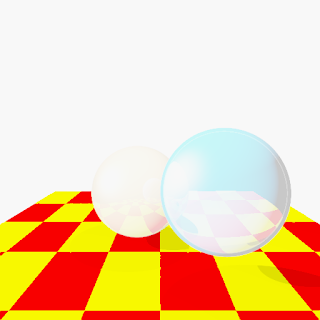

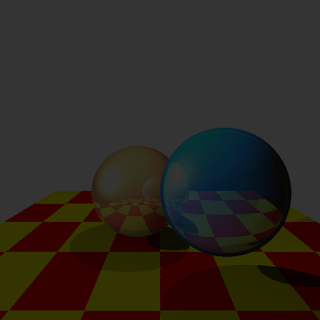

Tone mapping is the process of converting scene lighting values to display values. Different mapping equations produce different effects. The first three images below were rendered using Reinhard's model, with a constant key value but a different maximum luminance for each image.

These three images look similar because of the nature of the mapping. The mapping equation attempts to convert the lighting units to a fixed luminance level for display. In essence, since the entire scene has the same lighting contrast in each of these images (just different absolute lighting levels), they all map to similar display units.

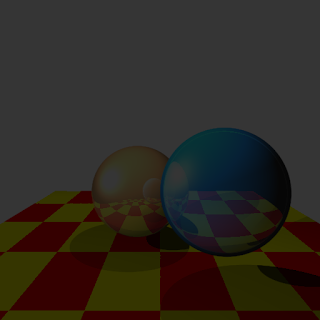

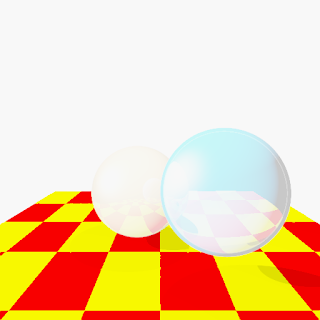
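The similarity described above can be checked numerically. The following is a minimal sketch, assuming the simplest global form of Reinhard's operator (scale by key over log-average luminance, then compress with L / (1 + L)) and leaving out the optional white-point term. The function names and sample luminances are hypothetical and are not taken from the renderer that produced these images.

```python
import math

def log_average(luminances):
    """Log-average (geometric mean) luminance of the scene."""
    safe = [max(L, 1e-9) for L in luminances]  # guard against log(0)
    return math.exp(sum(math.log(L) for L in safe) / len(safe))

def reinhard(luminances, key=0.18):
    """Simple global Reinhard mapping: scale by key / log-average, then compress."""
    avg = log_average(luminances)
    scaled = [key * L / avg for L in luminances]
    return [s / (1.0 + s) for s in scaled]

# Hypothetical scene luminances, and the same scene lit 100x brighter.
scene = [0.05, 0.5, 2.0, 40.0]
brighter = [100.0 * L for L in scene]

dim_display = reinhard(scene)
bright_display = reinhard(brighter)

for d, b in zip(dim_display, bright_display):
    print(f"{d:.6f}  {b:.6f}")
```

Because the log-average luminance scales along with the scene, the ratio of each pixel to that average is unchanged, so both versions of the scene end up with essentially the same display values.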

The image below adjusts the key value to create a brighter image. The lighting units here are being converted to brighter display units thanks to a higher key value.

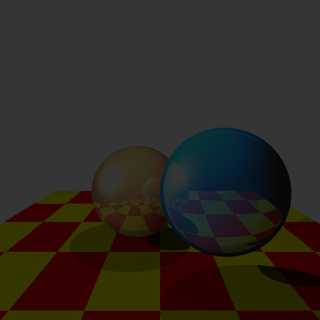
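To illustrate the effect of the key value, here is a small sketch that uses the same simplified Reinhard form (no white-point term). The helper name, the log-average value, and the key values are assumptions chosen for illustration only.

```python
def reinhard_pixel(luminance, key, log_avg):
    """Map one scene luminance to a display value in [0, 1)."""
    scaled = key * luminance / log_avg
    return scaled / (1.0 + scaled)

log_avg = 0.8          # hypothetical log-average luminance of the scene
luminance = 0.8        # a pixel at exactly the average luminance
keys = [0.18, 0.36, 0.72]

display_values = [reinhard_pixel(luminance, k, log_avg) for k in keys]
for k, v in zip(keys, display_values):
    print(f"key={k:.2f} -> display={v:.4f}")
```

Raising the key moves the same scene luminance to a higher display value, which is what makes the whole image appear brighter.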

Finally, we see an even brighter image below. This image used a very high key value, which created an over-exposed look. Key values can either be selected manually or pulled from the image itself. For example, a pixel position may be chosen and the luminance key read from that pixel.
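One possible reading of the pixel-based approach is sketched below. It assumes that the luminance at the chosen pixel replaces the scene's log-average as the reference luminance, so that this pixel lands exactly on the key value before compression. That interpretation, the tiny sample image, and the function name are all assumptions, not a description of how the images here were produced.

```python
def tone_map(image, key, reference):
    """Scale so that `reference` luminance maps to `key`, then compress."""
    result = []
    for row in image:
        out_row = []
        for L in row:
            scaled = key * L / reference
            out_row.append(scaled / (1.0 + scaled))
        result.append(out_row)
    return result

# Hypothetical 2x2 luminance image, indexed as image[y][x].
image = [[0.1, 0.4],
         [1.6, 6.4]]

# Read the reference luminance from a chosen pixel position.
x, y = 0, 1
pixel_reference = max(image[y][x], 1e-9)  # avoid dividing by zero

from_pixel = tone_map(image, key=0.18, reference=pixel_reference)
print(from_pixel[y][x])
```

Picking a darker pixel as the reference brightens the whole image, and picking a brighter one darkens it, so the choice of pixel acts much like an exposure control.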