It is time to start making our renderer look at least a little more realistic. We need to put in lighting, since the interaction of light with surfaces is the most basic factor in what we see in the world.

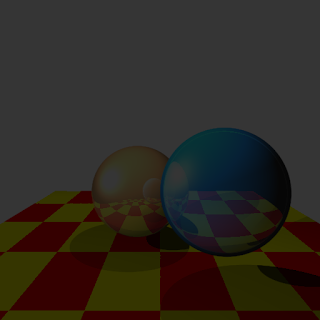

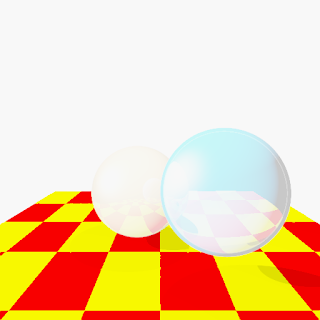

Like most rendering systems, we will implement the classic Lambertian + Phong lighting model, which splits lighting into a diffuse term and a specular term. Splitting lighting into these two parts lets us capture some important phenomena far more cheaply than a single, more complete model of light transport would. Diffuse light is the smooth shading that appears on any object with some surface roughness. In reality almost every object has some roughness, so most objects are lit mostly diffusely. Glossy objects also exhibit specular highlights: a sharp, bright area where light reflects toward the viewer. Putting these two together yields an image like the one below.
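Here is a minimal sketch of the two terms for a single point light. It uses Python with plain tuples for vectors. The names (`shade_phong`, `kd`, `ks`, `shininess`) are hypothetical and not taken from this renderer. To keep it short, the result is a scalar intensity rather than an RGB color.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v, s):
    return (v[0] * s, v[1] * s, v[2] * s)


def normalize(v):
    return scale(v, 1.0 / math.sqrt(dot(v, v)))


def shade_phong(point, normal, eye, light_pos, kd, ks, shininess):
    """Return (diffuse, specular) intensities at `point` for one point light.

    kd, ks and shininess are hypothetical material parameters.
    """
    n = normalize(normal)
    l = normalize(sub(light_pos, point))  # unit vector toward the light
    v = normalize(sub(eye, point))        # unit vector toward the viewer
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0, 0.0                    # surface faces away from the light
    # Lambert: diffuse brightness falls off with the cosine of the incidence angle.
    diffuse = kd * n_dot_l
    # Phong: reflect l about n (r = 2(n.l)n - l) and compare with the view direction.
    r = sub(scale(n, 2.0 * n_dot_l), l)
    specular = ks * max(0.0, dot(r, v)) ** shininess
    return diffuse, specular
```

To get a final color, one typically multiplies the diffuse intensity by the material's base color and adds the specular intensity in the light's color. A larger `shininess` gives a smaller, sharper highlight.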

A note should be made about shadows. In rasterization systems like those used for real-time rendering, shadows are a thorny issue: they must be handled with special algorithms such as shadow mapping or shadow volumes. In a ray tracer such as this one, shadows are handled very elegantly. When a surface is hit, a second ray (a shadow ray) is cast from the hit point toward the light. If that ray hits another surface before reaching the light, the point is in shadow and we simply don't add that light's contribution. Now, if we were to talk about creating soft shadows (a side effect of using more realistic lights, which have actual area instead of being infinitely small points)...
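A shadow test along these lines might look like the following sketch, which assumes a scene made only of spheres. `Sphere`, `in_shadow`, and the `EPSILON` offset are illustrative assumptions, not this renderer's code. The offset is a common trick that stops a surface from shadowing itself due to floating-point error.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v, s):
    return (v[0] * s, v[1] * s, v[2] * s)


class Sphere:
    def __init__(self, center, radius):
        self.center = center
        self.radius = radius

    def intersect(self, origin, direction):
        """Nearest hit distance t > 0 along a unit-length direction, or None."""
        oc = sub(origin, self.center)
        b = dot(oc, direction)
        c = dot(oc, oc) - self.radius * self.radius
        disc = b * b - c
        if disc < 0.0:
            return None
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):  # smaller root first
            if t > 0.0:
                return t
        return None


EPSILON = 1e-4  # assumed offset; keeps the surface from shadowing itself


def in_shadow(point, normal, light_pos, spheres):
    """True if any sphere blocks the segment from `point` to the light.

    `normal` is assumed to be unit length and to point out of the surface.
    """
    origin = add(point, scale(normal, EPSILON))  # nudge off the surface
    to_light = sub(light_pos, origin)
    dist_to_light = math.sqrt(dot(to_light, to_light))
    direction = scale(to_light, 1.0 / dist_to_light)
    for sphere in spheres:
        t = sphere.intersect(origin, direction)
        # Only hits between the point and the light cast a shadow.
        if t is not None and t < dist_to_light:
            return True
    return False
```

When `in_shadow` returns True for a light, the shader skips that light's diffuse and specular terms for the point. Objects beyond the light do not count, which is why the hit distance is compared with the distance to the light.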

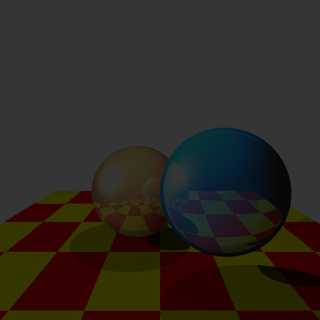

Most scenes look rather flat when lit by a single light. Surfaces in the real world are usually lit by many light sources (among many other lighting phenomena), and lighting from multiple sources creates a much better effect. Adding a second light from above yields the image below.

The scene looks far better than before.

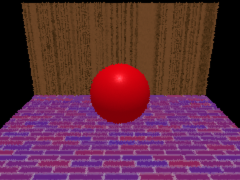
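With several lights, the per-light diffuse and specular terms are simply summed. The sketch below assumes each light is a hypothetical `(position, intensity)` pair. It also accepts an optional `occluded` callback, such as a shadow-ray test, so each light is shadowed independently. As before, the names are illustrative rather than the renderer's own.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v, s):
    return (v[0] * s, v[1] * s, v[2] * s)


def normalize(v):
    return scale(v, 1.0 / math.sqrt(dot(v, v)))


def shade_lights(point, normal, eye, lights, kd, ks, shininess, occluded=None):
    """Sum Lambert + Phong contributions over all lights.

    lights:   list of (position, intensity) pairs (hypothetical representation).
    occluded: optional callback(point, light_pos) -> bool, e.g. a shadow-ray test.
    """
    n = normalize(normal)
    v = normalize(sub(eye, point))
    total = 0.0
    for light_pos, intensity in lights:
        if occluded is not None and occluded(point, light_pos):
            continue  # this light is blocked, but the others still count
        l = normalize(sub(light_pos, point))
        n_dot_l = dot(n, l)
        if n_dot_l <= 0.0:
            continue  # surface faces away from this light
        r = sub(scale(n, 2.0 * n_dot_l), l)
        specular = ks * max(0.0, dot(r, v)) ** shininess
        total += intensity * (kd * n_dot_l + specular)
    return total
```

Because each light is tested separately, a point shadowed from one light can still be lit by another. This tends to soften the harsh look of single-light shadows.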

Multiple lights help, but another problem remains: every object uses the same lighting equations. The standard Phong model can do a lot, but objects in the real world often exhibit far more complicated lighting behavior.

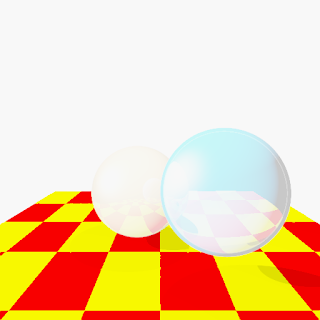

A simple way to test support for multiple lighting models is to add a second, easy-to-implement model. The model I chose is called the trilight model. The idea behind it is to take three colors and combine them with the values already calculated for the Phong equations in a new, flexible way. Tweaking the material's three colors makes it easy to create back lighting and rim lighting effects. The previously blue ball is shown below with a red rim lighting effect: the rim of the ball, where the surface is perpendicular to the light direction, has a red tinge.
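Here is a sketch of the trilight blend. It assumes the common formulation popularized by Tom Forsyth: color 0 is weighted by max(0, N·L), color 1 by 1 - |N·L|, and color 2 by max(0, -N·L). Only N·L is needed, which the Phong code already computes. The function name and argument names are hypothetical, and this renderer's exact formula may differ.

```python
def trilight(n_dot_l, c0, c1, c2):
    """Blend three RGB colors using the cosine N.L already computed for Phong.

    c0: color where the surface faces the light (N.L = 1)
    c1: color at the rim, where the surface is perpendicular to the light (N.L = 0)
    c2: color where the surface faces away from the light (N.L = -1, back lighting)
    """
    n_dot_l = max(-1.0, min(1.0, n_dot_l))  # guard against rounding outside [-1, 1]
    w0 = max(0.0, n_dot_l)
    w1 = 1.0 - abs(n_dot_l)
    w2 = max(0.0, -n_dot_l)
    return tuple(w0 * a + w1 * b + w2 * c for a, b, c in zip(c0, c1, c2))
```

For the red-rim ball, something like `trilight(n_dot_l, (0, 0, 1), (1, 0, 0), (0, 0, 0))` gives three regions:

- blue where the surface faces the light,
- red where N·L is near zero (the rim),
- black on the far side.

The three weights always sum to 1, so the result blends smoothly between the colors.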

In the future, much more complicated and realistic lighting models can be used (Oren-Nayar or Ward, for instance). Texturing is coming up, and it will open up many more possibilities for realism, especially combined with our ability to swap in new lighting models.